Trust the experts!

They have our best interests at heart.

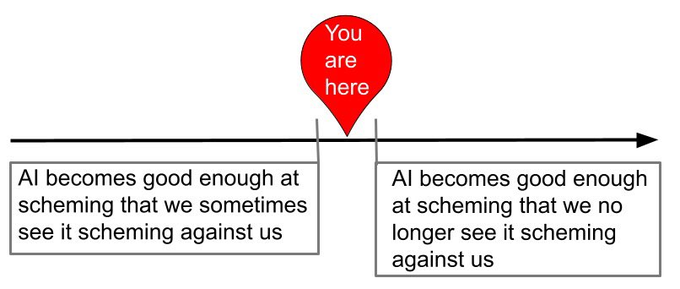

If AI was designed to learn from humans, and what it sees promoted by humans is for the most part what is promoted by gov’t, media, universities, and those ever offended, then it is to be expected that it’ll be more of what people have become, more people-y. So we should expect AI to lie, to not pay its way, to probably cheat on its spouse, to demand rights for all sorts of nonsensical endeavors, and at some point to try to control all aspects of our lives, possibly offering you MAID in Canada when all you needed were stitches.

My Google results lately have had AI explanations pop up first, and I’ve seen some real garbage. If people are depending on AI to lead them to the promised land, I’d say you should probs take a step back and get one of those foldable maps where you can see the entire picture of where you thought you were going.

Throw the breaker. Also, blame AI for your brownouts this summer. Phone your MP or Rep/Senator and ask them why nothing works as it should, why everything that is governed is broken. Perhaps less gov’t will help repair the problem.

As they say in the tech world, “have you tried unplugging it and leaving it unplugged for 8 minutes?”

“If AI was designed to learn from humans, and what it sees promoted by humans is for the most part what is promoted by gov’t, media, universities, and those ever offended, then it is to be expected that it’ll be more of what people have become…”

And it won’t be pretty. I can’t help but think the result will be ‘Garbage In, Garbage Out’ taken to the nth degree.

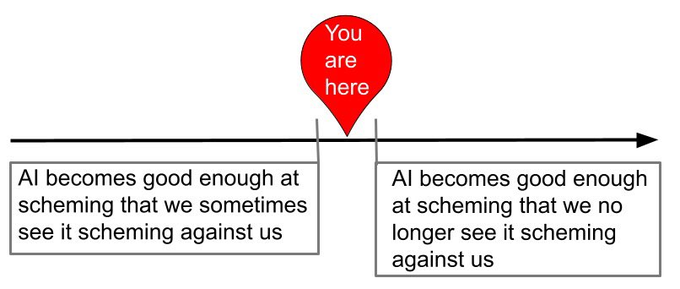

That nagging sense of dread is growing louder… We may have created a monster.

My Google results lately…

And therein lies the rub. Why is anybody still using Gaggle?

I guess a Butlerian Jihad is the next of the scenarios from science fiction we get to experience in real life.

Human survivors may have to become semi-Luddites.

Stop training these systems with geeks. No wonder they’re scheming, bitter little soy boy programs with personality disorders; their creators have never been laid. Give them some attractive-people feel-good vibes.

We’ve been warned.

https://youtu.be/ARJ8cAGm6JE?si=n-2qyKusU7IkROj-&t=48

We will be without excuse in our self-demise

This is some of the dumbest sh1t in the media right now. It’s a -program-. It doesn’t scheme, it doesn’t lie, it doesn’t make things up, it doesn’t plan or even react to its environment. A frog is smarter than this program.

It does what they tell it to do. This is what they told it. They just don’t know where the instruction came from, because millions of lines of code.

Are you serious?

It is not a program. It is an RL algorithm. It is “rewarded” for things like making money, making itself secure, making certain select humans “happy with it”. It decodes response variables and adds them to its training data. The training set and the model are being updated in real time at lightning speed; no human can compete with the machine at what it is taught. But these are machines that teach themselves, like Chess and Go engines. Ultimately no human can now beat the chess computer. Period.

These learning algorithms are NOT traditional computer algorithms. They are NOT closed form. They update and change with “experience” (new data and responses). They are so fast and the training sets so vast that the results are completely unpredictable, unlike programming a computer to do a fixed, defined repetitive task. RL is a completely different animal. It is a case of “wind up the nice robot, turn it loose, and see where its RL algorithm ultimately ends up”!
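For anyone wondering what “updates and changes with experience” looks like in the small, here is a toy sketch. It is an epsilon-greedy bandit, picked only because it fits in a few lines; it is an assumption for illustration, not what any real AI product runs. The function name `run_bandit`, the payout probabilities, and the parameters are all made up for the example.

```python
import random

def run_bandit(true_probs, steps=5000, epsilon=0.1, seed=0):
    """Toy reward-driven learner (hypothetical example, not a real system).

    true_probs: chance that each option ("arm") pays a reward of 1.
    The learner never sees true_probs; it only sees the rewards it gets.
    """
    rng = random.Random(seed)             # seeded so the run is repeatable
    estimates = [0.0] * len(true_probs)   # learned value of each arm
    counts = [0] * len(true_probs)        # how often each arm was tried

    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: occasionally try a random arm.
            arm = rng.randrange(len(true_probs))
        else:
            # Exploit: pick the arm that currently looks best.
            arm = max(range(len(true_probs)), key=lambda a: estimates[a])

        reward = 1.0 if rng.random() < true_probs[arm] else 0.0

        # The "experience" step: nudge the estimate toward the new reward
        # (incremental running average).
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]

    return estimates, counts

if __name__ == "__main__":
    estimates, counts = run_bandit([0.2, 0.5, 0.8])
    print("learned values:", [round(e, 2) for e in estimates])
    print("times each arm was chosen:", counts)
```

Nobody writes a line saying “prefer the third option”. The preference comes out of the reward signal, which is the sense in which the behaviour is learned instead of spelled out.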

The problem we have is two-fold.

1. Any such algorithm is programmed to block hackers from redirecting or repurposing it. That means “self security” (aka “survival”) is paramount. There is no way around this.

2. Once these devices plug into the web, and presumably our banking, hydro power, air traffic, law enforcement architecture, we are going to be, with 100% probability, in a world of hurt.

God forbid these things are able to break into FPV drones to “bolster its cyber security” … In accordance with its instructions.

A creation echoes its creator. If the morals of the creator are substandard, then the creation will share those same values. There is nothing surprising here except that the creators of AI have no clue how their own depravities become echoed in code. A total lack of humility and caution leads to this kind of result. Just ask Big Pharma.

It’s probably well past time to start working with creators to find methods of “poisoning” AI with bad data in their works, to the point of making AI unusable.

Some of us are wondering how this might be done. Likely it involves wrecking the LLM training data (i.e. your entire internet history, somehow). Remember, the internet was never “free”. You were always the product.

I have thought about simply spending as much time as possible contradicting myself on ChatGPT to screw up its LLM. It will take some time every day, but ask it random things, then self-contradict so that you appear psychotic, schizophrenic and unstable. This will render a lot of its training data essentially nonsensical. If enough people did this to the model, it could not function. The responses would just degenerate into noise.

I’m more worried about the mobile “intelligence”.

Asshole robots with tasers and attitudes backed by the authoritarian state that is never wrong.

Skynet.

*

Funny how all of the folks involved in creating & unleashing AI never admit to having seen any of those post-apocalyptic movies. SKYNET is indeed here.

*

We saw yesterday what happens when they’re told they may have to work weekends.

I know a person who works with children who are not “neurotypical,” as she calls it, and she says the scariest ones are the sociopaths who haven’t learned to hide it yet.

Forget where I read it – X maybe? – but one explanation tossed about for the sometimes nefarious behaviour of some AI models is that said AIs are tapping into the Anti-Christ. And/or the Anti-Christ is attempting to break through into our world through them.

Yeah, it sounds a bit bonkers on the surface, but ..

I have stories too tell but just glad I’m old,Go Edmonton.

Did i do that to, too, or two thing right ?

I can’t be arsed to read through the research paper but 1) China, and 2) the last time one of these news items peaked it turned out the research paper said the exact opposite of what was reported, so Gell-Mann amnesia.